This is an exciting week for those who follow the Cisco UCS platform, with announcements around the Fabric Infrastructure, the Compute and the Unified Management. Intel’s E5 Romley processor launch this week is sure to grab a lot of attention, but the announcements around Cisco UCS Fabric Infrastructure and UCS Management might have an even bigger impact on you.

I’m going to start with the fabric hardware announcements and I’ll save the really cool UCS-Manager announcements for last.

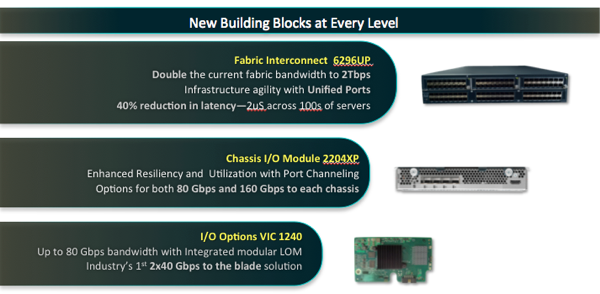

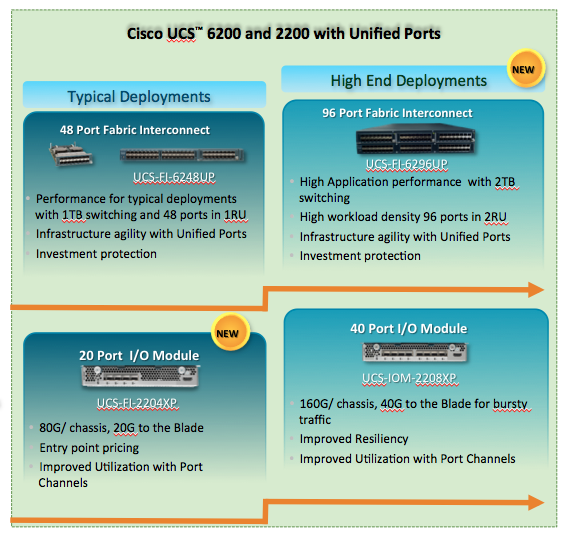

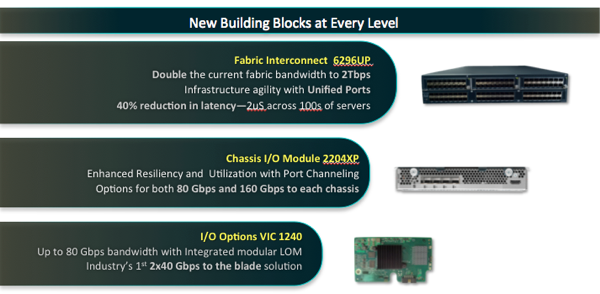

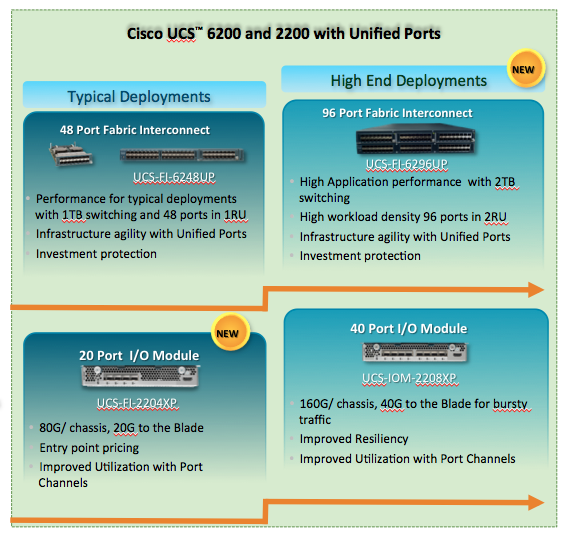

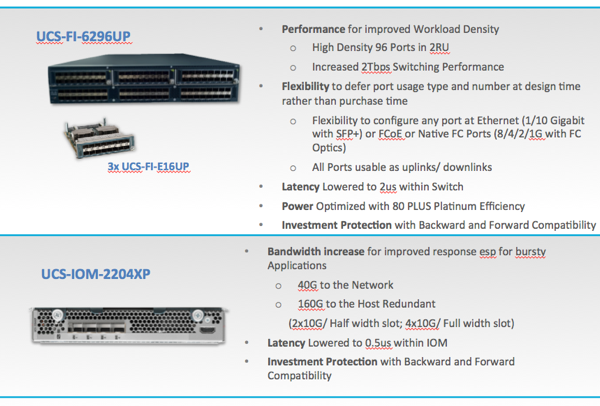

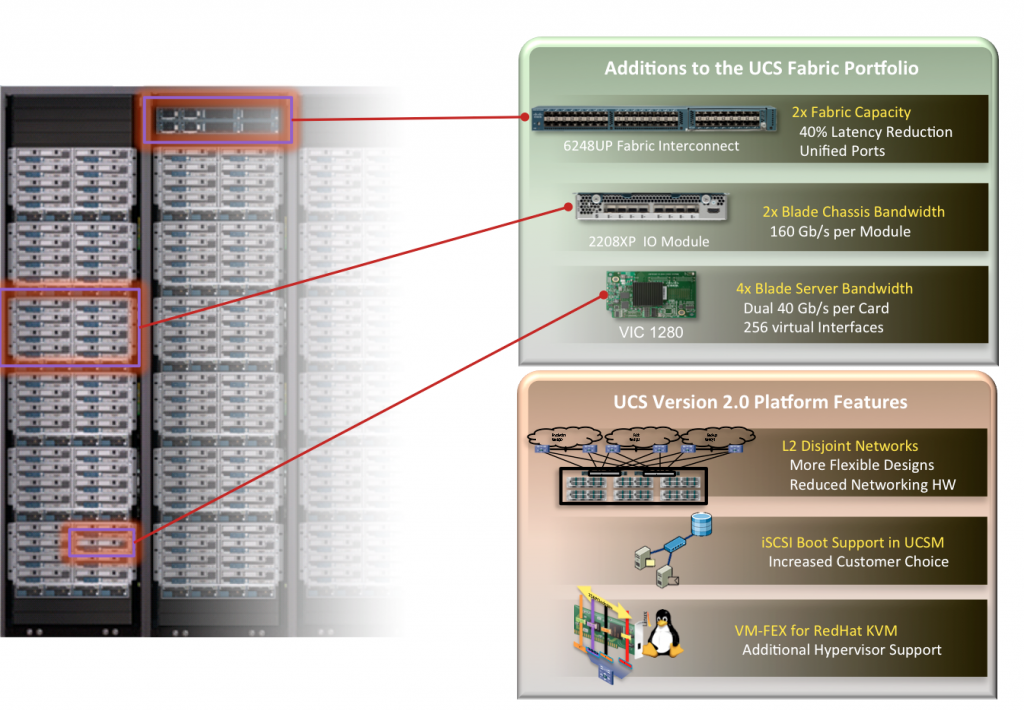

The new 6296UP completes the 6200 family of Fabric Interconnects (announced last year at Cisco Live in Las Vegas) and brings the port density to a level that allows customers to build UCS Domains that contain 160 servers (rack or blade) and simultaneously have high bandwidth. You might recall in the previous 6100 series of Fabric Interconnects there was a trade-off between density in blades versus bandwidth. That is now a thing of the past.

The new IO Module 2204XP is, in my view, going to be the de facto replacement for the current 2104XP IO Module. It brings all of the feature benefits of the 2208XP but at a price point that is close to that of the existing 2104XP. With 4 * 10Gb uplinks, it provides the same bandwidth as the current 2104XP. However, in combination with any of the 6200UP series Fabric Interconnects, that 40Gb of bandwidth is now delivered in a port-channel. Bandwidth to the individual blades is doubled, from 1*10Gb on the 2104XP to 2*10Gb on the 2204XP.

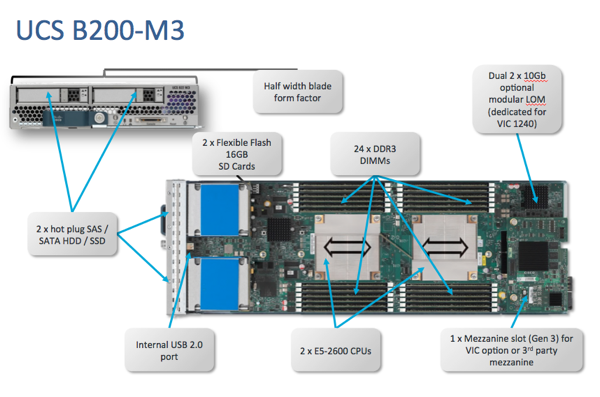

The new VIC1240 is a form factor not seen before on the UCS blades: it is a modular LOM specifically designed for the new M3 blades that were announced this week. Functionally the VIC1240 is the same as the VIC1280. They share the same ASICs, and both provide 2*40Gbps to the blade (each 40Gb consists of 4 * 10Gb links that are automatically port-channeled when the chassis contains one of the 2200XP series IO Modules).

Here is the complete family overview.

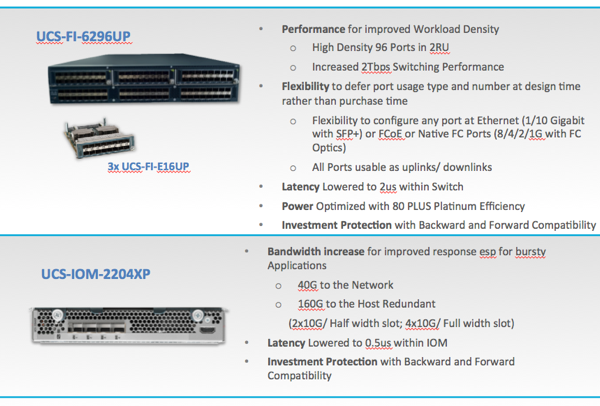

A bit more detail on the 6296UP.

One of the two key functionalities is Unified Ports. Just like its smaller brother the 6248UP and the Nexus 5548/5596, the new 6296UP Fabric Interconnect is Unified Ports enabled on all ports.

Every port is capable of being an Ethernet (1/10Gb), FCoE or native FC (1/2/4/8G) port simply by providing the right optic. Selecting the role of a port is a software setting that requires a reboot of the Fabric Interconnect (or of the expansion module if you are only changing ports on an expansion module), so it pays to think up front about how you want to divide the ports on the Fabric Interconnect. The advice is to start with Ethernet from port one and work up, and to start with FC from the highest port and work down.
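To make the "Ethernet from the bottom, FC from the top" advice concrete, here is a minimal Python sketch of that allocation rule. The function name `plan_unified_ports` and the 48-port example are purely illustrative assumptions; this is not UCS Manager code, and it ignores any platform-specific constraints on where the Ethernet/FC boundary may sit.

```python
def plan_unified_ports(total_ports: int, fc_ports: int) -> dict[str, list[int]]:
    """Split a block of unified ports into Ethernet and FC roles.

    Ethernet fills from port 1 upward; FC fills from the highest port downward.
    Hypothetical helper for illustration only.
    """
    if not 0 <= fc_ports <= total_ports:
        raise ValueError("fc_ports must be between 0 and total_ports")
    boundary = total_ports - fc_ports  # last Ethernet port
    return {
        "ethernet": list(range(1, boundary + 1)),
        "fc": list(range(total_ports, boundary, -1)),
    }


# Example: an assumed 48-port block with 8 ports reserved for native FC.
plan = plan_unified_ports(total_ports=48, fc_ports=8)
print("Ethernet ports:", plan["ethernet"][0], "to", plan["ethernet"][-1])
print("FC ports (highest first):", plan["fc"])
```

Keeping the two roles in contiguous blocks at opposite ends means you can grow either side later without reshuffling ports that are already cabled.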

The second key functionality is the port-channel capability to the 2200XP series of IO Modules. In the first generation Fabric Interconnect and IO Module the only option was static pinning. This gives predictability in terms of bandwidth that blades have available during failure conditions – but customers asked for more flexibility. The 6200UP, when connected to a 2200XP IOM, supports a single port-channel to the IO Module.
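The difference between the two modes is easiest to see under a link failure. The sketch below is a simplified model, not the actual pinning logic of the Fabric Interconnect: the round-robin slot-to-uplink mapping and all function names are assumptions made for illustration.

```python
def static_pinning(slots: int, uplinks: list[int]) -> dict[int, int]:
    """Pin each blade slot to exactly one uplink (assumed round-robin mapping)."""
    return {slot: uplinks[(slot - 1) % len(uplinks)] for slot in range(1, slots + 1)}


def slots_on_uplink(pinning: dict[int, int], uplink: int) -> list[int]:
    """Slots that depend on a given uplink under static pinning."""
    return [slot for slot, link in pinning.items() if link == uplink]


def port_channel_bandwidth_gb(links_up: int, gb_per_link: int = 10) -> int:
    """Aggregate bandwidth shared by all slots when the uplinks are port-channeled."""
    return links_up * gb_per_link


pinning = static_pinning(slots=8, uplinks=[1, 2, 3, 4])

# Static pinning: only the slots pinned to the failed uplink are affected,
# and every other slot keeps its full, predictable 10Gb uplink.
print("Slots affected if uplink 2 fails:", slots_on_uplink(pinning, 2))

# Port-channel: all slots keep connectivity and share whatever links remain.
print("Port-channel bandwidth, all 4 links up:", port_channel_bandwidth_gb(4), "Gb")
print("Port-channel bandwidth, 1 link failed:", port_channel_bandwidth_gb(3), "Gb")
```

This is the trade-off in a nutshell: static pinning isolates a failure to a known set of blades, while a port-channel spreads the remaining bandwidth across every blade in the chassis.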

A bit more detail on the 2204XP IO Module.

Latency is reduced not just in the IO Module but also in the 6200UP Fabric Interconnects. For those customers who felt that latency was a potential problem, the new platform delivers low, consistent latency across 160 servers. If latency matters, the consistency of latency is usually even more relevant than the actual latency: with VMs you never know where a VM might move to. Blade-to-blade latency is consistent inside UCS at 3us (0.5us for IOM, 2us for FI, 0.5us for IOM).

The 2204XP provides sixteen 10Gb ports to the 8 blades inside a chassis, delivering up to 20Gb of bandwidth from a single IOM to a blade. No production system should be running on a single fabric, so with one IOM per fabric a total of 40Gb of bandwidth can be delivered to a single half-width blade with the 2204XP.

The actual details behind how that works are a topic for another blog post, as it will introduce you to networking on the new M3 blades as well as the current M2 blades.
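The arithmetic behind those numbers can be written out as a small Python sketch. The function and key names are hypothetical, and the even split of server-facing ports across blades is an assumption that matches the figures quoted here.

```python
GB_PER_PORT = 10  # each server-facing IOM port is 10Gb


def per_blade_bandwidth(server_ports: int, blades: int, fabrics: int = 2) -> dict[str, int]:
    """Bandwidth available to one blade, assuming ports are split evenly across blades."""
    ports_per_blade = server_ports // blades
    per_iom_gb = ports_per_blade * GB_PER_PORT
    return {
        "ports_per_blade": ports_per_blade,
        "per_iom_gb": per_iom_gb,
        "total_gb": per_iom_gb * fabrics,  # one IOM per fabric
    }


# 2204XP: 16 server-facing ports, 8 blades per chassis, two fabrics.
print(per_blade_bandwidth(server_ports=16, blades=8))
```

Passing `fabrics=1` shows what a blade is left with if one fabric is down: half of the total.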

UCS-Manager

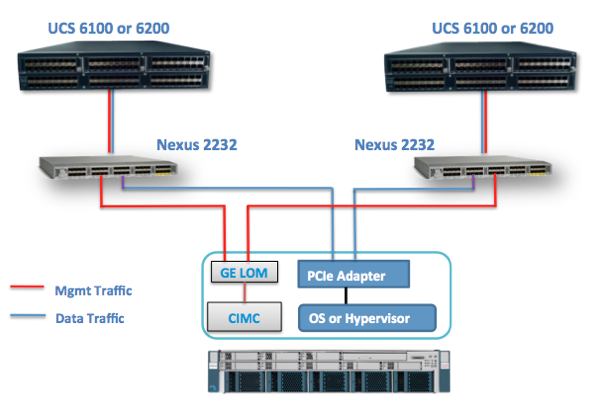

The UCS Manager software received an upgrade to version 2.0.2. This might sound like a minor release, but it actually delivers the hardware support for all the new components talked about earlier, as well as support for the new M3 blades and the Nexus 2232.

I can hear you ask “What does a Nexus 2232 have to do with UCS?”

In version 1.4 Cisco introduced the concept of adding a specific UCS rack server to the UCS Domain, managed by UCSM. The implementation at that time left room for improvement – it didn’t scale and it wasn’t consistent between blades and rack servers.

UCSM 2.0.2 and the Nexus 2232 change that. Now a single UCS Domain can contain 160 servers (UCS blades or UCS rack servers). All of the benefits UCSM provides to blades now become available for UCS rack servers. In the process, the range of supported UCS C-series servers expands to the full currently shipping family and the newly announced M3 series of rack servers.

I can still hear you ask “Ok, but what does that have to do with a Nexus 2232?”

The Nexus 2232 is a Fabric Extender and is part of the Nexus 2200 series of Fabric Extenders. The IO Modules that go into the back of a UCS chassis are Fabric Extenders too, and they are also part of the Nexus 2200 series, hence the product numbers 2204XP and 2208XP.

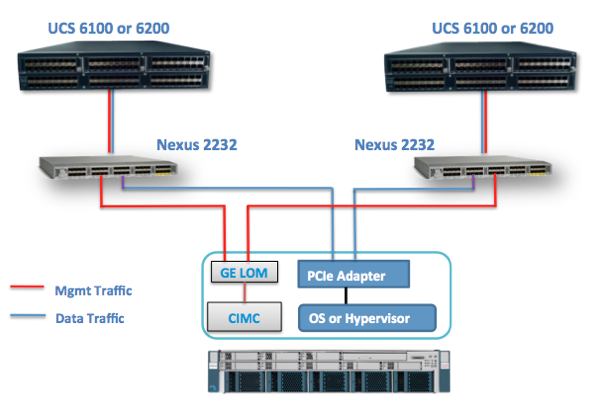

All the blades in a UCS chassis connect to the IO Modules, with both their data interface and their management interface. That all happens through the mid-plane in the chassis, of course, which means you don’t see those connections.

The Nexus 2232 brings the same architecture to the UCS rack servers. Each server has both a data and a management interface that connect to the Nexus 2232, which itself is connected to the 6100 or 6200 series of Fabric Interconnects (just like any IO Module is).

This is what allows a single UCS Domain to now contain 160 UCS rack servers, and you can create some exciting solutions with that. Stay tuned.
